Big-Data Processing Optimization: 100x Speed & 10x Cost Reduction

Transformed an advertising platform's infrastructure by migrating from MySQL to GCP BigQuery, achieving a 100x processing speed improvement and a 10x cost reduction with a containerized Python pipeline and Airflow orchestration.

The Client

The advertising platform startup had reached an inflection point: they had product-market fit, strong early customer traction, and ambitious expansion plans, but their technical infrastructure couldn’t scale. The company provided sophisticated advertising analytics and campaign optimization tools—advanced cross-channel attribution modeling, predictive customer lifetime value calculations, and real-time bidding optimization recommendations.

The business opportunity was substantial: the company planned to increase its client base tenfold within the year. However, the existing data infrastructure was already straining under the current load, which threatened that growth trajectory.

The Challenge

The core technical problem was architectural: the platform was built on MySQL, a traditional relational database not designed for the big-data analytical workloads that advertising platforms require. When the system needed to calculate attribution models across millions of impression events, queries would run for hours before returning results.

Performance issues directly impacted customer experience: data freshness lagged hours behind real time, and dashboard queries would time out. Costs were heading in the wrong direction as well: the infrastructure was over-provisioned with expensive database instances, yet performance remained poor. The system had become brittle, with minor load spikes triggering cascading failures. Scaling to 10x the client count on this architecture would have resulted in catastrophic failure.

Our Solution

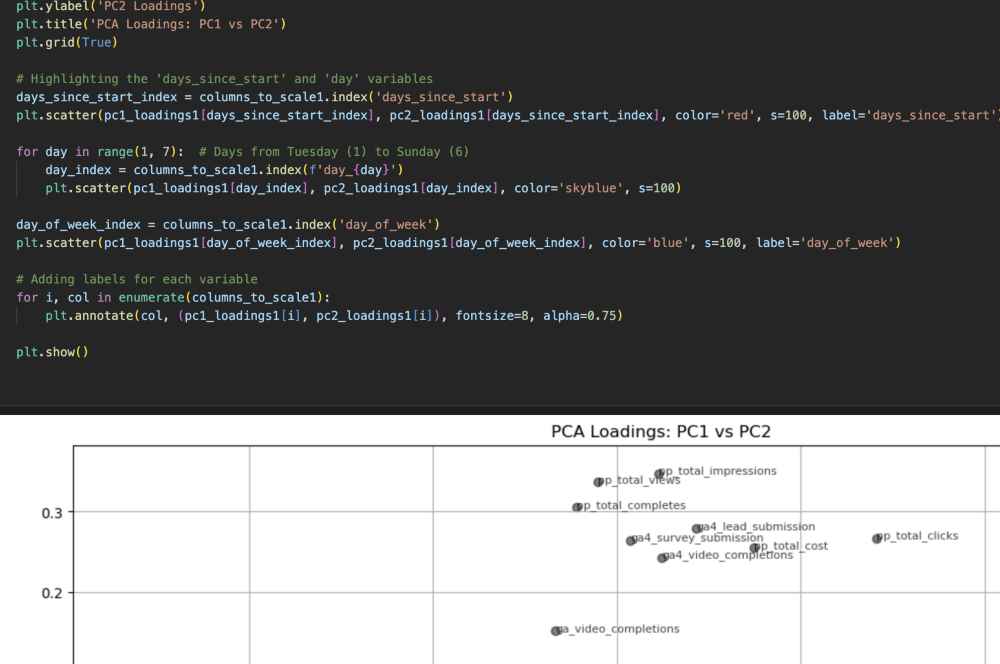

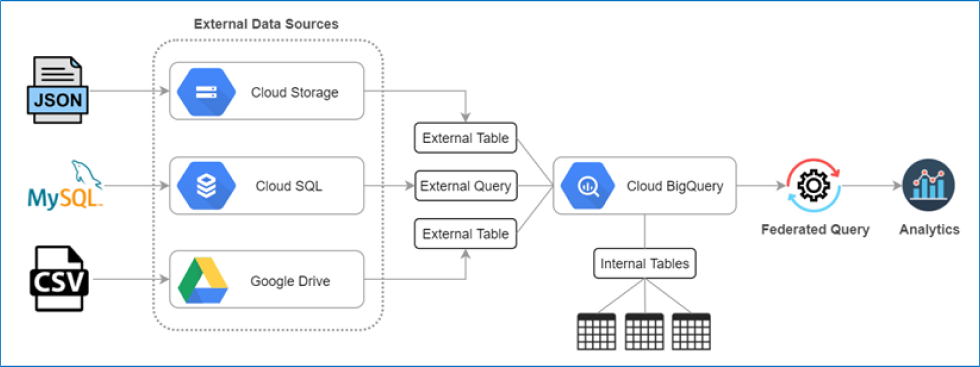

The transformation centered on migrating from MySQL to Google Cloud Platform's BigQuery, a distributed data warehouse designed for massive-scale analytical workloads. We began with a comprehensive analysis of the existing infrastructure: mapping tables, documenting query patterns, and identifying bottlenecks.

We translated the MySQL schemas into BigQuery table structures optimized for analytical performance, taking advantage of BigQuery's column-oriented storage and adding date partitioning and clustering. Queries were rewritten to leverage BigQuery's distributed processing. We built a complete Python-based data pipeline, containerized with Docker, and implemented Apache Airflow for orchestration, which provided dependency management, retry logic, monitoring dashboards, and alerting.
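To make the partitioning and clustering idea concrete, here is a minimal sketch in Python that builds BigQuery DDL and a matching query as plain strings. The table name, column names, and the choice of `campaign_id` and `channel` as clustering keys are hypothetical illustrations, not the schema used in the project.

```python
from typing import List, Tuple

# Hypothetical columns for an impression-events table (illustrative only,
# not the project's actual schema).
EVENT_COLUMNS: List[Tuple[str, str]] = [
    ("event_ts", "TIMESTAMP"),
    ("campaign_id", "STRING"),
    ("channel", "STRING"),
    ("user_id", "STRING"),
    ("cost_micros", "INT64"),
]


def build_events_ddl(dataset: str, table: str) -> str:
    """Return BigQuery DDL for a date-partitioned, clustered events table."""
    column_sql = ",\n  ".join(f"{name} {dtype}" for name, dtype in EVENT_COLUMNS)
    return (
        f"CREATE TABLE IF NOT EXISTS `{dataset}.{table}` (\n"
        f"  {column_sql}\n"
        ")\n"
        # One partition per calendar day of the event timestamp.
        "PARTITION BY DATE(event_ts)\n"
        # Rows within each partition are co-located by these columns.
        "CLUSTER BY campaign_id, channel"
    )


def build_daily_spend_query(dataset: str, table: str) -> str:
    """Return a query that filters on the partitioning column.

    Restricting event_ts to a date range lets BigQuery skip partitions
    outside that range instead of scanning the whole table.
    """
    return (
        "SELECT campaign_id, channel, SUM(cost_micros) AS spend_micros\n"
        f"FROM `{dataset}.{table}`\n"
        "WHERE event_ts >= TIMESTAMP(@start_date)\n"
        "  AND event_ts < TIMESTAMP(@end_date)\n"
        "GROUP BY campaign_id, channel"
    )


if __name__ == "__main__":
    print(build_events_ddl("ads", "impressions"))
    print()
    print(build_daily_spend_query("ads", "impressions"))
```

The strings could be submitted through any BigQuery client. In practice the clustering keys should be chosen from the columns that the documented query patterns filter and group on most often.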

The Impact

Query performance improved by 100x: processing that had consumed hours now completed in seconds. Despite that gain, infrastructure cost fell by a factor of 10 under BigQuery's pay-per-query model. The new architecture could easily handle 10x more clients without degradation.
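The link between a pay-per-query model and table design can be illustrated with simple arithmetic: on-demand cost scales with the bytes a query scans, so reading fewer partitions and fewer columns lowers the bill. The sketch below assumes evenly sized daily partitions and takes the price per TiB as an input. All figures in the usage example are hypothetical and are not the client's numbers.

```python
TIB = 1024 ** 4  # bytes in one tebibyte


def scanned_bytes(
    table_bytes: float,
    days_in_table: int,
    days_queried: int,
    column_fraction: float,
) -> float:
    """Estimate bytes scanned by a query on a date-partitioned columnar table.

    Assumes every daily partition is the same size and that column_fraction
    is the share of the table's bytes held by the columns the query reads.
    """
    if days_in_table <= 0 or not 0 <= days_queried <= days_in_table:
        raise ValueError("days_queried must be between 0 and days_in_table")
    if not 0.0 <= column_fraction <= 1.0:
        raise ValueError("column_fraction must be between 0 and 1")
    return table_bytes * days_queried / days_in_table * column_fraction


def on_demand_cost(bytes_scanned: float, usd_per_tib: float) -> float:
    """Cost of one query when billing is proportional to bytes scanned."""
    return bytes_scanned / TIB * usd_per_tib


if __name__ == "__main__":
    # Hypothetical inputs: a 10 TiB table holding one year of events,
    # and an assumed price per TiB (check current pricing before relying on it).
    price = 6.25
    full_scan = on_demand_cost(10 * TIB, price)
    pruned = on_demand_cost(scanned_bytes(10 * TIB, 365, 7, 0.2), price)
    print(f"full table scan: ${full_scan:.2f}")
    print(f"7 days, 20% of columns: ${pruned:.2f}")
```

This is only a rough model. Actual billing depends on the pricing plan in use and on how data is distributed across partitions.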

Operational stability improved substantially: the fragile MySQL system was replaced with a robust cloud-native architecture. The engineering team shifted from database firefighting to product development. The transformation positioned the company as a technical leader, with near-instant query results becoming a competitive differentiator in sales conversations.