Long-Term Memory AI Chatbot: Enhanced RAG for Financial Services

Transformed a rudimentary AI chatbot MVP into a production-grade conversational system for a stealth financial startup, implementing multivector retrieval, re-ranking, and map-reduce memory summarization.

The Client

The stealth financial startup was building a conversational AI platform for the financial advisory space—a domain where conversations are inherently long-form, context-dependent, and high-stakes. Unlike consumer chatbots that handle simple Q&A, financial advisory conversations span extended sessions covering portfolio strategy, risk tolerance, regulatory considerations, and market analysis. Users expected the system to remember prior discussions, build on established context, and provide increasingly informed guidance over time.

The founding team had built a rudimentary MVP with basic RAG (Retrieval-Augmented Generation) capabilities, demonstrating enough promise to validate market demand. But the MVP had critical limitations: responses frequently missed relevant context from the knowledge base, conversations lost coherence after a few exchanges, and there was no systematic way to measure or improve response quality. They needed a technical partner to transform the prototype into a production-grade system.

The Challenge

Three interconnected problems needed solving. First, response relevance: the existing RAG pipeline used basic vector similarity search, which often retrieved tangentially related chunks instead of the most pertinent information. In financial advisory, imprecise retrieval didn’t just produce weak answers—it could surface misleading or contradictory information, eroding user trust.

Second, retrieval accuracy: the knowledge base contained diverse document types—regulatory filings, market analyses, product documentation, historical Q&A—each requiring different retrieval strategies. A single embedding approach couldn’t capture the varied semantic structures, leading to inconsistent retrieval quality across document types.

Third, long-term memory: financial advisory relationships develop over multiple sessions spanning weeks or months. Users would reference previous conversations (“Last time we discussed my risk exposure to tech stocks…”) and expect the system to recall and build on prior context. The MVP had no memory beyond the current conversation window—every session started from scratch, forcing users to re-establish context repeatedly.

Our Solution

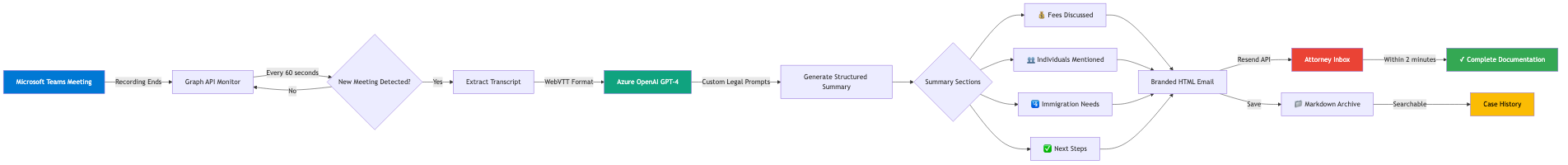

We rebuilt the conversational pipeline across all three dimensions. For response relevance, we implemented advanced prompt engineering using few-shot prompting and chain-of-thought techniques. Few-shot examples demonstrated the expected response format and depth for different query types. Chain-of-thought prompting guided the model through explicit reasoning steps: identify the user’s core question, determine what knowledge base information is needed, synthesize retrieved context with conversational history, and formulate a coherent response.
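The prompt structure described above can be sketched as follows. This is a minimal illustration, not the production prompt. The `FewShotExample` record, the `build_prompt` function, and the exact wording of the reasoning steps are hypothetical. Matching few-shot examples to a query by a `query_type` label is also an assumption about how examples were selected.

```python
from dataclasses import dataclass

# Hypothetical few-shot record; field names are illustrative only.
@dataclass(frozen=True)
class FewShotExample:
    query_type: str
    question: str
    answer: str

# The four chain-of-thought steps named in the text, phrased as instructions.
REASONING_STEPS = (
    "Identify the user's core question.",
    "Determine what knowledge base information is needed to answer it.",
    "Synthesize the retrieved context with the conversation history.",
    "Formulate a coherent response.",
)

def build_prompt(question: str, query_type: str, examples: list[FewShotExample],
                 retrieved: list[str], history: list[str]) -> str:
    """Assemble a few-shot, chain-of-thought prompt as a single string."""
    # Keep only examples for this query type so the demonstrated format fits.
    shots = [ex for ex in examples if ex.query_type == query_type]
    parts = ["Answer the user's question by working through these steps:"]
    parts += [f"{i}. {step}" for i, step in enumerate(REASONING_STEPS, start=1)]
    for ex in shots:
        parts.append(f"\nExample question: {ex.question}\nExample answer: {ex.answer}")
    parts.append("\nConversation history:\n" + "\n".join(history))
    parts.append("\nRetrieved context:\n" + "\n".join(f"- {c}" for c in retrieved))
    # End on an open "Reasoning:" cue so the model reasons before answering.
    parts.append(f"\nUser question: {question}\nReasoning:")
    return "\n".join(parts)
```

Filtering examples by query type keeps the prompt short and shows the model only the response format relevant to the current request. A production system might instead choose examples by embedding similarity to the incoming question.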

For retrieval accuracy, we replaced the basic vector search with a multivector retrieval system. Each document was indexed using multiple representation strategies—summary embeddings for high-level matching, chunk embeddings for detail retrieval, and hypothetical question embeddings for query-aligned matching. Retrieved results passed through a re-ranking layer that scored relevance against the full conversational context, not just the latest message. This dramatically reduced false-positive retrievals.
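Below is a minimal sketch of multivector indexing followed by context-aware re-ranking. It assumes a toy bag-of-words `embed` function in place of a real embedding model, and plain cosine similarity in place of a learned re-ranker; production systems commonly use a cross-encoder for that step. `MultiVectorIndex`, `rerank`, and the `chunk_size` default are hypothetical names chosen for illustration. Each document receives a summary vector, one vector per chunk, and one vector per hypothetical question, all pointing back to the same parent document. A document's first-stage score is the score of its best-matching representation.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

class MultiVectorIndex:
    """Several vectors per document, all mapped back to one parent document."""

    def __init__(self) -> None:
        self.docs: dict[str, str] = {}
        self.vectors: list[tuple[str, str, Counter]] = []  # (doc_id, kind, vector)

    def add(self, doc_id: str, text: str, summary: str, questions: list[str],
            chunk_size: int = 50) -> None:
        self.docs[doc_id] = text
        # High-level matching: one vector for the document summary.
        self.vectors.append((doc_id, "summary", embed(summary)))
        # Detail retrieval: one vector per fixed-size word chunk.
        words = text.split()
        for i in range(0, len(words), chunk_size):
            self.vectors.append((doc_id, "chunk", embed(" ".join(words[i:i + chunk_size]))))
        # Query-aligned matching: one vector per hypothetical question.
        for q in questions:
            self.vectors.append((doc_id, "question", embed(q)))

    def search(self, query: str, k: int = 5) -> list[str]:
        """Score every vector; each document keeps its best-matching representation."""
        q = embed(query)
        best: dict[str, float] = {}
        for doc_id, _kind, vec in self.vectors:
            best[doc_id] = max(best.get(doc_id, 0.0), cosine(q, vec))
        ranked = sorted(best, key=best.get, reverse=True)
        return [d for d in ranked if best[d] > 0][:k]

def rerank(index: MultiVectorIndex, candidates: list[str],
           conversation: list[str]) -> list[str]:
    """Re-score candidates against the whole conversation, not just the last turn."""
    context = embed(" ".join(conversation))
    return sorted(candidates, key=lambda d: cosine(context, embed(index.docs[d])),
                  reverse=True)
```

For instance, with a bonds document and a tech-stocks document indexed, the query “what about the risk?” on its own ranks the bonds document first, because its short summary matches “risk” most closely. Re-ranking against the full conversation (“I hold mostly tech stocks”, then the same question) moves the tech-stocks document to the top.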

For long-term memory, we designed a multi-dimensional map-reduce summarization system. As conversations extended, the system periodically summarized the dialogue along multiple salient dimensions: topics discussed, user preferences expressed, decisions made, questions asked, and action items identified. These dimensional summaries were stored and retrieved in subsequent sessions, giving the model rich context about the user’s history without exceeding token limits. Because each segment of the history was summarized independently (the map step) and the partial summaries were then merged (the reduce step), no single summarization call had to fit the entire history into the context window, so the approach scaled to very long conversation histories.
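The map-reduce step can be sketched as below, under stated assumptions. `summarize` is a hypothetical callable standing in for an LLM summarization call. The five dimension names mirror the list above, and `chunk_size` and `fan_in` are illustrative parameters. The map phase summarizes fixed-size windows of turns independently. The reduce phase merges partial summaries a few at a time, repeating until one summary per dimension remains, so no single call receives more than `max(chunk_size, fan_in)` inputs however long the history grows.

```python
from typing import Callable

DIMENSIONS = ("topics", "preferences", "decisions", "questions", "action_items")

# summarize(texts, dimension) -> str: hypothetical stand-in for an LLM call
# that condenses a list of texts along one dimension.
Summarizer = Callable[[list[str], str], str]

def batches(items: list[str], size: int) -> list[list[str]]:
    """Split items into consecutive groups of at most `size`."""
    return [items[i:i + size] for i in range(0, len(items), size)]

def map_reduce_memory(turns: list[str], summarize: Summarizer,
                      chunk_size: int = 20, fan_in: int = 4) -> dict[str, str]:
    """Summarize a conversation along each dimension using bounded-size calls."""
    if fan_in < 2:
        raise ValueError("fan_in must be at least 2 for the reduce loop to converge")
    memory: dict[str, str] = {}
    for dim in DIMENSIONS:
        # Map: summarize each window of turns independently.
        partials = [summarize(window, dim) for window in batches(turns, chunk_size)]
        # Reduce: merge partial summaries fan_in at a time until one remains.
        while len(partials) > 1:
            partials = [summarize(group, dim) for group in batches(partials, fan_in)]
        memory[dim] = partials[0] if partials else ""
    return memory
```

For example, 100 turns with `chunk_size=10` and `fan_in=3` take 10 map calls and then 4, 2, and 1 reduce calls per dimension, with no call seeing more than 10 inputs.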

We also built automated evaluation infrastructure: custom AI models that systematically scored response quality across relevance, accuracy, completeness, and coherence dimensions. This replaced subjective manual review with quantitative measurement, enabling data-driven iteration on prompts, retrieval parameters, and memory strategies.
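Here is a minimal sketch of the evaluation loop. It assumes the evaluator model is wrapped in a hypothetical `judge` callable that returns one score per dimension; the 1 to 5 scale is also an assumption. Running the same fixed test set through two pipeline variants and diffing the per-dimension averages is what makes a prompt or retrieval change measurable.

```python
from statistics import mean
from typing import Callable

EVAL_DIMENSIONS = ("relevance", "accuracy", "completeness", "coherence")

# judge(question, context, answer) -> {dimension: score}: hypothetical wrapper
# around the evaluator model, assumed to return scores on a 1-5 scale.
Judge = Callable[[str, str, str], dict[str, float]]

def evaluate(cases: list[tuple[str, str, str]], judge: Judge) -> dict[str, float]:
    """Average the judge's per-dimension scores over a fixed test set."""
    if not cases:
        raise ValueError("evaluation needs at least one test case")
    per_dim: dict[str, list[float]] = {d: [] for d in EVAL_DIMENSIONS}
    for question, context, answer in cases:
        scores = judge(question, context, answer)
        for d in EVAL_DIMENSIONS:
            per_dim[d].append(scores[d])
    return {d: mean(v) for d, v in per_dim.items()}

def compare(baseline: dict[str, float], candidate: dict[str, float]) -> dict[str, float]:
    """Per-dimension change from baseline to candidate (positive means better)."""
    return {d: candidate[d] - baseline[d] for d in EVAL_DIMENSIONS}
```

Keeping the test set fixed between runs matters: a score change then reflects the pipeline change rather than a different mix of questions.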

The Impact

The enhanced system delivered measurable improvements across all three dimensions. Response relevance increased substantially: users received answers that directly addressed their questions with appropriate depth and context from the knowledge base. Multivector retrieval with re-ranking eliminated the “close but not quite” retrieval failures that had plagued the MVP, ensuring the model worked with the most pertinent information available.

Long-term memory transformed the user experience from transactional interactions into genuine advisory relationships. Users could reference prior conversations naturally, and the system would recall relevant context—previous risk assessments, portfolio preferences, regulatory concerns discussed weeks earlier. This continuity was the feature that most clearly differentiated the product from generic chatbot interfaces.

The automated evaluation framework gave the team a systematic approach to continuous improvement. Rather than relying on anecdotal user feedback, they could measure the impact of every prompt adjustment, retrieval parameter change, or memory strategy update. This data-driven development cycle accelerated iteration speed and provided concrete metrics for investor conversations about product quality and trajectory.