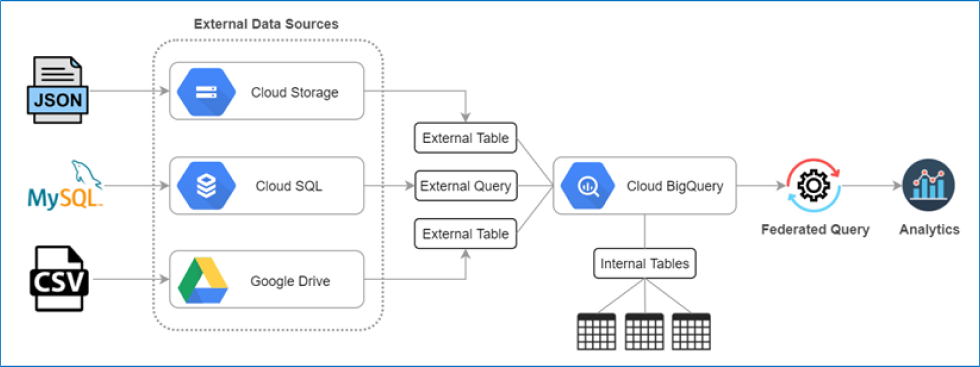

Data Engineering

Big-Data Processing Optimization: 100x Speed & 10x Cost Reduction

- BigQuery, GCP, Data Engineering, Python, Docker, Airflow, Infrastructure Optimization, Cost Reduction

The Client

The advertising platform startup had reached an inflection point: they had product-market fit, strong early customer traction, and ambitious expansion plans, but their technical infrastructure couldn’t scale. The company provided sophisticated advertising analytics and campaign optimization tools—advanced cross-channel attribution modeling, predictive customer lifetime value calculations, and real-time bidding optimization recommendations.

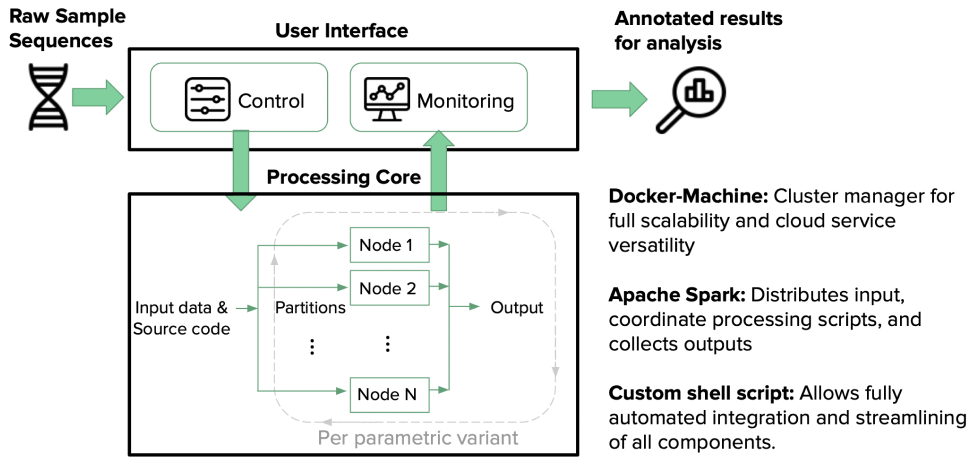

Scalable Genomic Processing: 1,000x Speed Improvement with Distributed Computing

- Genomics, Apache Spark, PySpark, Distributed Computing, Healthcare, Data Engineering, Visualization

The Client

The immuno-sequencing startup spun out of a premier academic institution with a focused mission: accelerate genomic research by making large-scale immuno-sequence analysis accessible and fast. Their work centered on analyzing immune system repertoires—the billions of unique receptor sequences that define how an organism fights disease. This analysis is fundamental to vaccine development, cancer immunotherapy, and autoimmune disease research.